DL Coursera 1 - NN & DL - W1: Intro to DL

Posted on 31/08/2019, in Machine Learning. This note was taken when I started following the Deep Learning specialization on Coursera. You can audit the courses in this specialization for free. If you want to obtain a certificate, you have to pay.


Go to Week 2.

Documentation

- Beautifully drawn notes by Tess Ferrandez.

- Next steps: take the fast.ai course series, as it focuses more on practical work (ref).

Welcome

- AI is the new electricity.

- Electricity once transformed countless industries: transportation, manufacturing, healthcare, communications, …

- AI will now bring about an equally big transformation.

- What you’ll learn:

- (4 weeks) Foundation: NN and DL

- How to build a neural network / deep learning network and train it on data

- Build a system to recognize cats

- (3 weeks) Improving Deep Neural Networks:

- Hyperparameters tuning

- Regularization

- Optimization: momentum, RMSProp, and the Adam optimization algorithm.

- (2 weeks) Structuring your ML project

- It turns out that the strategy for building a machine learning system has changed in the era of deep learning.

- train/test sets may come from different distributions <– this happens frequently in DL

- end-to-end DL (when should you use it, and when shouldn't you?)

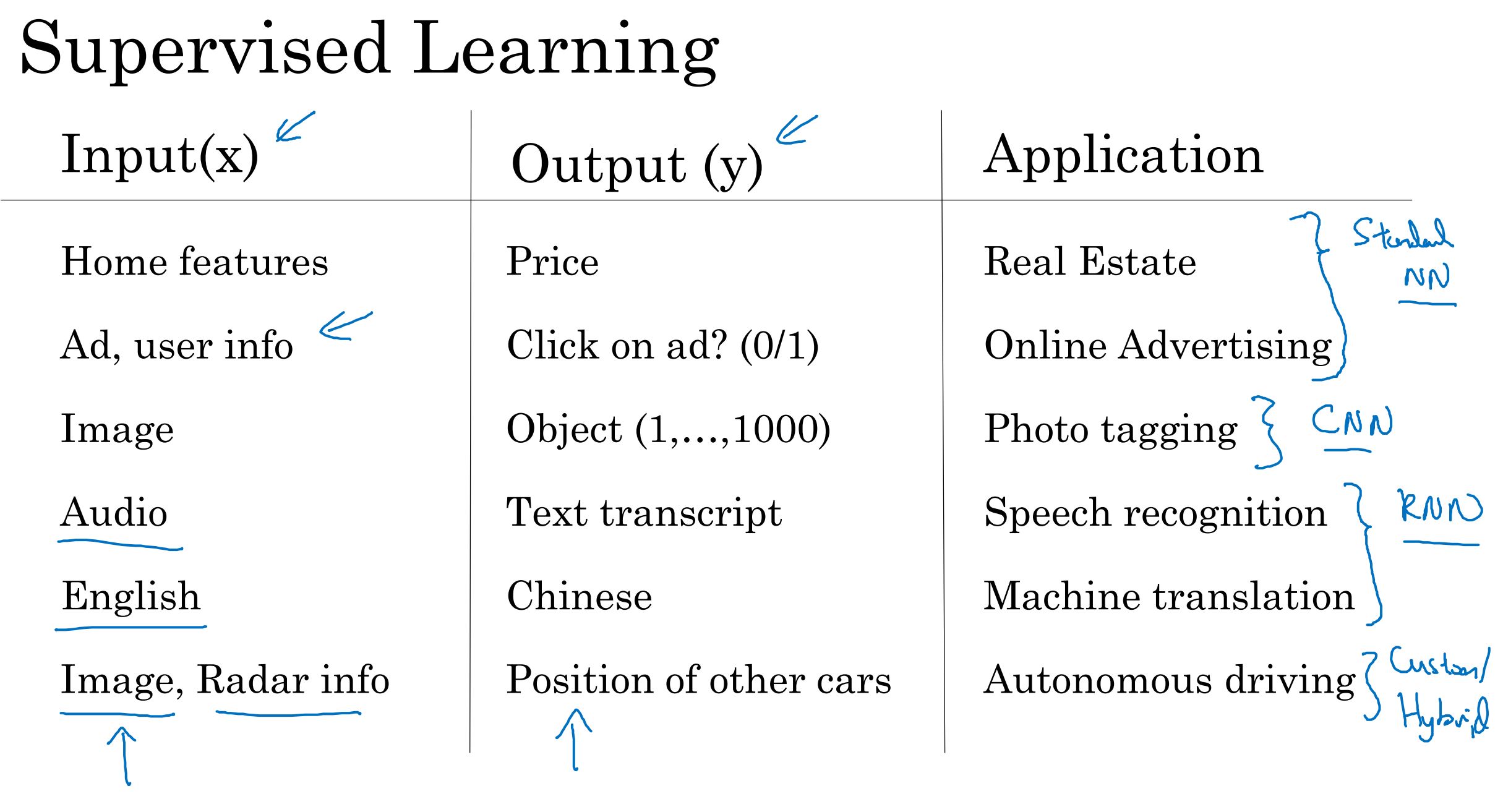

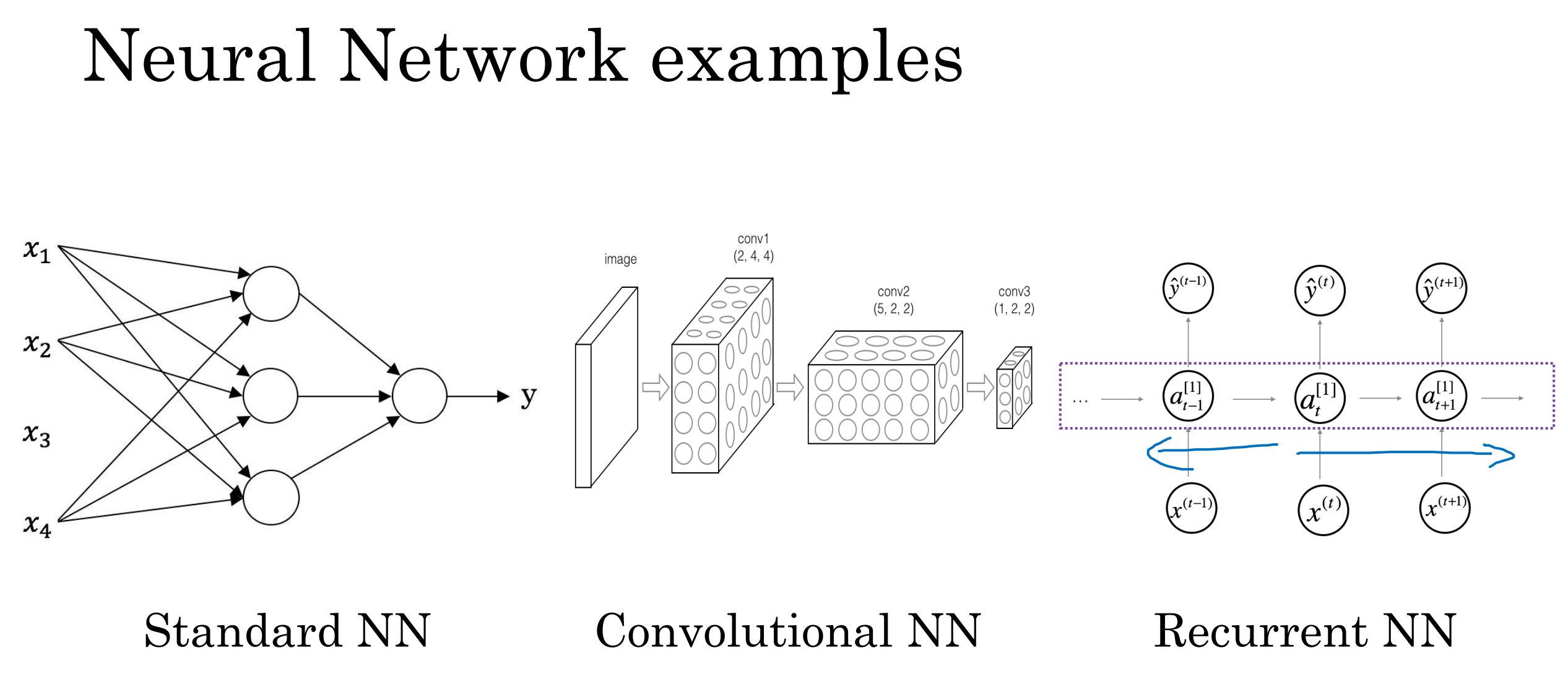

- Convolutional Neural Networks (CNNs)

- usually applied to images!

- NLP (building sequence models)

- RNN (Recurrent Neural Network) <– commonly used when the data is a sequence

- LSTM (Long Short-Term Memory model)

- applied to speech recognition / music generation

- NLP = sequence of words


Introduction to Deep Learning

What is a neural network?

- Lecture notes + Lecture slides

- Deep Learning = training Neural Networks (sometimes very large NNs)

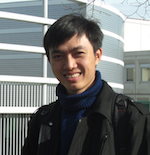

- Housing price prediction

- Very simple NN: a single neuron with a ReLU (Rectified Linear Unit) function

- y = price, x = size of house

- NN with multiple neurons:

- input layer - hidden layer - output layer

- The middle layer is densely connected because every input is linked to every node in the middle layer (unlike the figure above, where some inputs are not connected to some nodes in the middle layer).

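The housing example above can be sketched in a few lines of plain Python. This is only an illustration: the weights, biases, and input values are made up, and the helper names `predict_price` and `dense_layer` are hypothetical, not taken from the course.

```python
def relu(z):
    """Rectified Linear Unit: returns z when positive, otherwise 0."""
    return max(0.0, z)


def predict_price(size, w, b):
    """Single-neuron 'network': price = ReLU(w * size + b)."""
    return relu(w * size + b)


def dense_layer(inputs, weights, biases):
    """Densely connected layer: every input feeds every node.

    weights[j][i] is the (hypothetical) weight from input i to node j.
    """
    return [
        relu(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# One neuron: x = size of house, y = price (made-up parameters).
print(predict_price(100, 0.5, -10))  # 40.0
print(predict_price(10, 0.5, -10))   # 0.0, ReLU clips the negative value

# Input layer -> hidden layer (2 nodes) -> output layer (1 node).
x = [1.0, 2.0]
hidden = dense_layer(x, [[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0])
output = dense_layer(hidden, [[2.0, 2.0]], [1.0])
print(hidden, output)  # [0.0, 1.5] [4.0]
```

Note that `dense_layer` never skips a connection: each hidden node sees all inputs, and training (not shown here) decides which inputs matter for which node.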

Supervised Learning with Neural Networks

- Lecture notes + Lecture slides

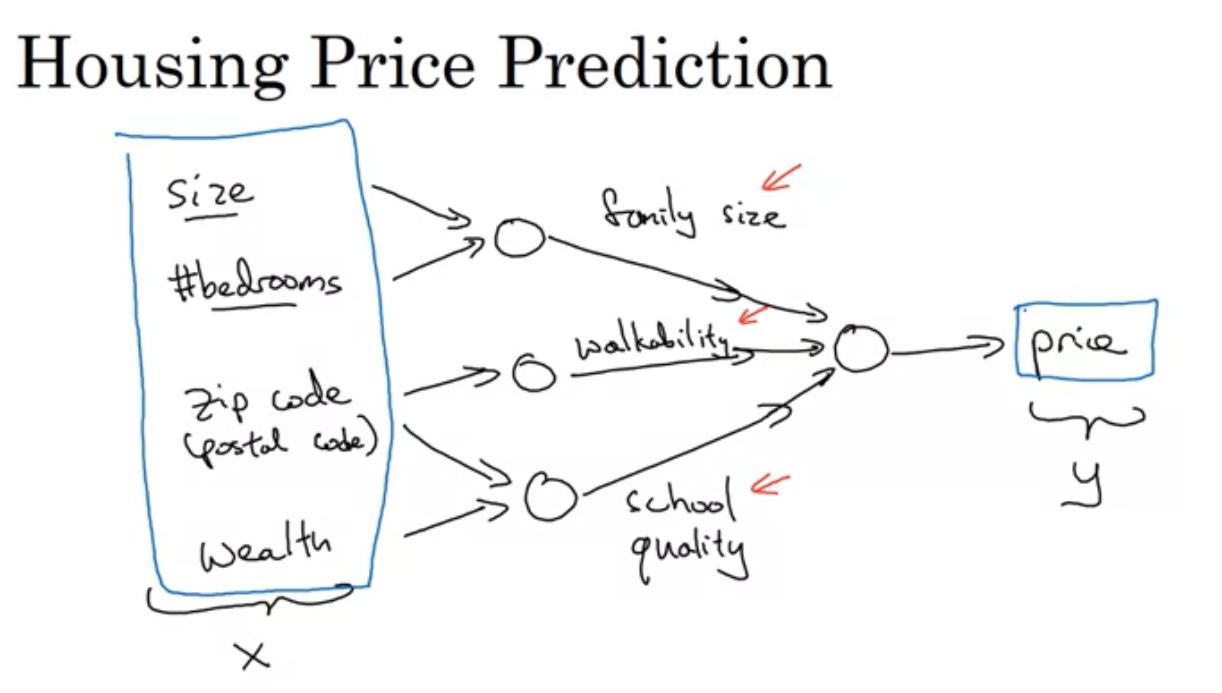

- Almost all of the economic value created by neural networks so far has come through supervised learning.

- Supervised Learning: structured data vs unstructured data

- structured data: each feature has a very well-defined meaning.

- unstructured data: audio, images, words in text <– thanks to NN and DL, computers are now much better at interpreting this type of data.
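As a rough illustration of the distinction above, the snippet below contrasts a structured record with an unstructured input. All field names and values are invented for the example, and `to_feature_vector` is a hypothetical helper.

```python
# Structured data: every field has a well-defined meaning.
# (Field names and values are made up for illustration.)
house = {"size_sqft": 2104, "bedrooms": 3, "price_usd": 400000}

# Unstructured data: a tiny 2x2 grayscale "image".
# No single pixel value has a named meaning on its own.
image = [[0, 255], [128, 64]]


def to_feature_vector(pixels):
    """Flatten the pixel grid and scale each value to the range [0, 1]."""
    return [p / 255 for row in pixels for p in row]


# In supervised learning, both kinds of input end up as (x, y) pairs.
x_structured = [house["size_sqft"], house["bedrooms"]]
y_structured = house["price_usd"]

x_unstructured = to_feature_vector(image)
y_unstructured = 1  # hypothetical label, e.g. 1 = "cat"

print(x_structured, y_structured)
print(x_unstructured, y_unstructured)
```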

Why is Deep Learning taking off?

- Lecture notes + Lecture slides

- Why has the idea of DL been around for a long time, yet only now really taken off?

- If you want to reach very high performance, you need 2 conditions:

- train a bigger network: train a big enough NN in order to take advantage of the huge amount of data <– it takes too long to train

- throw more data at it: you do need a lot of data <– we often don’t have enough data

- Scale drives DL progress:

- Data

- Computation (CPU, GPU)

- Algorithms

- Ex: changing from the sigmoid function to the ReLU function makes gradient descent run faster

- The process of training a NN is iterative (Idea > Code > Experiment > Idea …): faster computation helps you iterate and improve new algorithms.
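One way to see why the sigmoid-to-ReLU switch mentioned above can speed up learning is to compare the slopes of the two functions. This is a minimal sketch with hypothetical helper names: it only evaluates derivatives at a few points and does not train anything.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    """Derivative of sigmoid: s(z) * (1 - s(z)); it shrinks toward 0 for large |z|."""
    s = sigmoid(z)
    return s * (1.0 - s)


def relu_grad(z):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0


for z in (0.0, 2.0, 5.0, 10.0):
    print(f"z={z:5.1f}  sigmoid'={sigmoid_grad(z):.6f}  relu'={relu_grad(z):.1f}")
```

For large positive `z`, the sigmoid slope is close to zero, so gradient-based updates become very small, while the ReLU slope stays at 1.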

About this course & Course Resources

- Week 1: Introduction

- W2: Basics of NN programming

- W3: One hidden layer NN

- W4: Deep NN